Professor Philip L.H. Yu

Head and Professor

1. Background

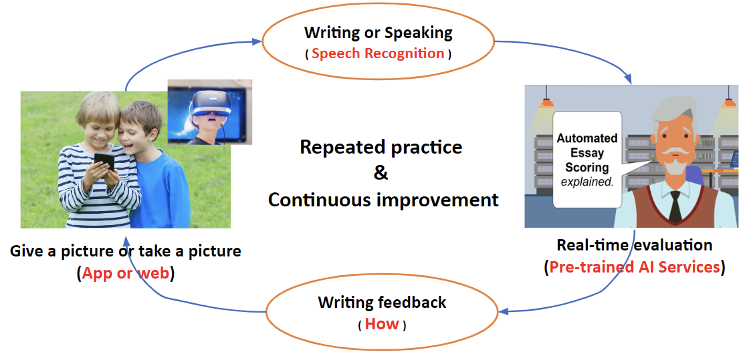

Autonomous learning outside the classroom is gaining increasing attention worldwide, especially in the context of the coronavirus pandemic. For language learning, AI-driven writing assessment can facilitate autonomous learning activities effectively. As shown in Figure 1, by scoring student answers automatically, AI tools can provide immediate qualitative and quantitative feedback to students, thereby reducing teacher workload and shortening the time needed to return feedback.

At present, these tools are reshaping the way people teach and learn languages, especially through online courses and mobile apps that enable personalized autonomous learning. However, existing methods usually evaluate writing quality by extracting language-related features via natural language processing. Picture-cued writing, a popular paradigm in language instruction and assessment, typically asks students to describe one or more pictures. For these tasks, the existing methods are unable to measure the link(s) between a picture and its textual description.

Figure 1. Language learning facilitated by automated assessment tools

2. AI-driven approach

To develop an automatic scoring system for picture-cued tasks, we proposed a novel approach that introduces emerging cross-modal AI technology. The approach grades student responses to picture-cued writing tasks in terms of content achievement and language quality.

(1) Estimating content achievement via cross-modal matching. This task is handled by three encoders: an image encoder, a text encoder, and a multimodal encoder. Given an image and a text, the image encoder detects salient regions in the image and generates a visual representation for each region; the text encoder parses the text and generates a contextualized textual representation for each word; and the multimodal encoder then estimates a visual-semantic similarity between the image and the text by matching the visual and textual representations. This similarity is used as an index of the content achievement of the response, i.e., a measure of how well the response describes the content of the picture.
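The matching idea described above can be illustrated with a minimal sketch. It assumes the region and word representations are already available as plain vectors, and it replaces the learned multimodal encoder with a simple stand-in: each word is matched to its most similar region by cosine similarity, and the per-word scores are averaged. The function names `cosine` and `image_text_similarity` are hypothetical and are not taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def image_text_similarity(region_vecs, word_vecs):
    """Toy visual-semantic similarity (an assumption, not the authors' model).

    For every word vector, take the best cosine match among the region
    vectors, then average those best matches over all words.
    """
    if not region_vecs or not word_vecs:
        return 0.0
    best_per_word = [max(cosine(w, r) for r in region_vecs) for w in word_vecs]
    return sum(best_per_word) / len(best_per_word)
```

In this simplified form, a response whose words all find a closely matching region scores near 1, while words with no visual counterpart pull the average down. A trained multimodal encoder would learn this alignment rather than rely on a fixed similarity function.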

(2) Evaluating language quality via natural language processing. Besides content, language quality is also important in writing assessment. Intuitively, writing with more grammar errors or a lack of fluency should receive lower scores. For this purpose, our approach evaluated the language quality of student writing with a group of indices, such as words and their part-of-speech tags, the number of grammar errors and spelling errors, and sentence fluency and sentence structure, which were estimated using NLP techniques and deep learning-based language models.
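As a rough illustration of what such indices can look like, the sketch below computes two very simple ones: a word count and a spelling-error count. It is only a toy under stated assumptions: the `vocabulary` argument is a hypothetical word list supplied by the caller, and any word outside it is counted as a spelling error. The indices described above (part-of-speech tags, grammar errors, fluency, sentence structure) would need proper NLP tools and language models, which are not shown here.

```python
import re


def language_indices(sentence, vocabulary):
    """Toy language-quality indices (illustrative only, not the authors' feature set).

    `vocabulary` is a hypothetical set of known lowercase words; tokens
    outside it are treated as spelling errors.
    """
    # Lowercase the sentence and keep alphabetic tokens (apostrophes allowed).
    tokens = re.findall(r"[a-z']+", sentence.lower())
    misspelled = [t for t in tokens if t not in vocabulary]
    return {
        "word_count": len(tokens),
        "spelling_errors": len(misspelled),
        "misspelled": misspelled,
        "error_rate": len(misspelled) / len(tokens) if tokens else 0.0,
    }
```

Indices like these would typically be combined with the content-achievement score to produce the final grade.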

3. AI scoring results

We investigated the agreement between human scores and AI scores on the same picture-cued writing task, for which nearly three hundred K-12 students were recruited and asked to write a sentence describing each given picture. On this 10-point task, we found a small mean absolute error of 0.479 and a high adjacent-agreement rate of 90.64%, indicating accurate scoring. We believe this approach could reduce the subjective elements inherent in human-centered grading and free teachers’ time from the mundane task of grading for other valuable endeavors, such as designing teaching plans based on AI-generated diagnostic information about students’ progress.
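The two agreement measures reported above can be computed as in the following sketch. The definition of adjacent agreement used here, the share of responses whose AI score lies within one point of the human score, is an assumption, since the tolerance is not stated in this summary. The function names are hypothetical.

```python
def mean_absolute_error(human_scores, ai_scores):
    """Average absolute difference between paired human and AI scores."""
    if len(human_scores) != len(ai_scores) or not human_scores:
        raise ValueError("score lists must be non-empty and of equal length")
    total = sum(abs(h - a) for h, a in zip(human_scores, ai_scores))
    return total / len(human_scores)


def adjacent_agreement_rate(human_scores, ai_scores, tolerance=1.0):
    """Fraction of responses whose AI score is within `tolerance` of the human score.

    The default tolerance of one point is an assumption, not a value from the paper.
    """
    if len(human_scores) != len(ai_scores) or not human_scores:
        raise ValueError("score lists must be non-empty and of equal length")
    within = sum(1 for h, a in zip(human_scores, ai_scores) if abs(h - a) <= tolerance)
    return within / len(human_scores)
```

For example, with human scores `[8, 6, 9, 4]` and AI scores `[7.5, 6.0, 7.0, 4.5]`, the absolute differences are 0.5, 0, 2, and 0.5, so the mean absolute error is 0.75 and three of the four responses fall within one point, giving an adjacent-agreement rate of 0.75.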

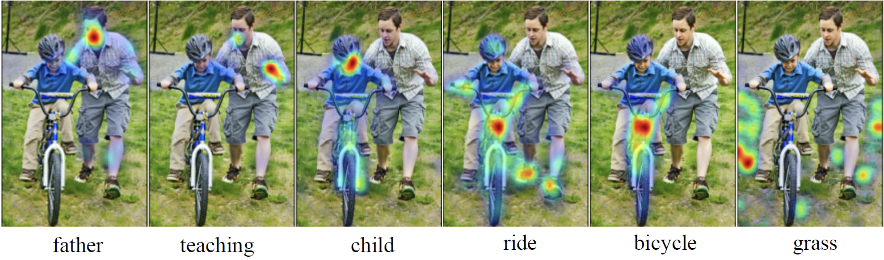

For an intuitive understanding, Figure 2 presents an example of the automatic picture-text matching results produced by our approach. For the response “A father was patiently teaching his child to ride a bicycle on the grass”, which a student wrote for the picture, we show heatmaps on the picture corresponding to six key words in the response. The example shows that, after cross-modal deep learning, the AI model matches visual areas to the target words reasonably well.

Figure 2. An example for image-text matching results generated by AI model automatically

4. Future work

To facilitate language learning in authentic situations, we aim to develop an intelligent system for students in primary and secondary schools in the future. Specifically, we will develop a mobile application to enable students to enrich and extend their language learning experiences in authentic settings through language-related daily life learning activities, and construct a set of efficient AI models to facilitate independent language learning by providing students with timely feedback and personalized support.

Please refer to the paper if you are interested in our approach: Zhao, R., Zhuang, Y., Zou, D., Xie, Q. and Yu, P.L.H. AI-assisted automated scoring of picture-cued writing tasks. To appear in Education and Information Technologies.